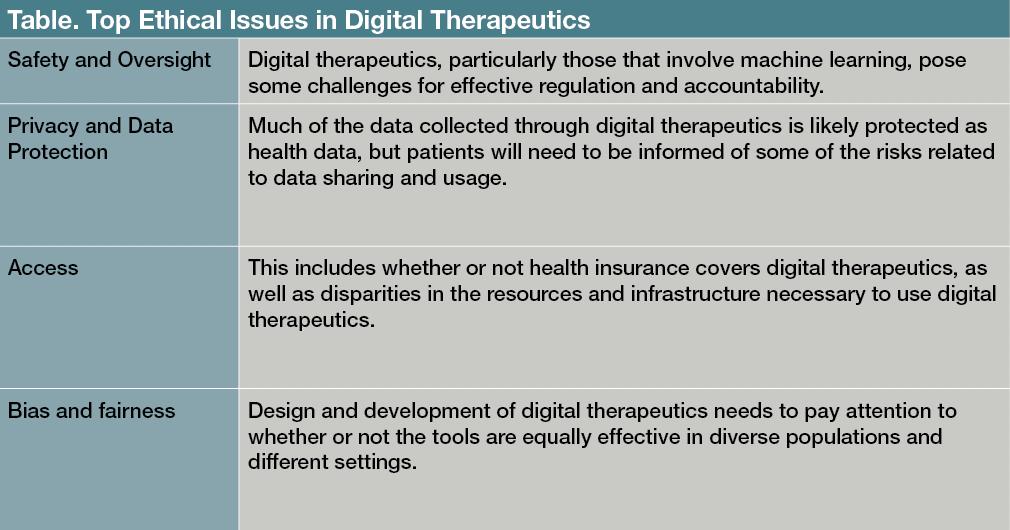

As digital therapeutics become more popular, it is important to consider how they can be integrated into mental health care in an ethical manner. The path forward requires continued attention both to appropriate oversight and models of care, and to issues of data protection and justice.

Safety and Oversight Issues

The primary ethical concerns for digital mental health technology have been safety, accountability, privacy, data protection, transparency, consent, and bias and fairness (Table).¹⁻³ Many consumer mental health apps are not regulated, and there are related concerns about the lack of an evidence base for these apps.⁴

Digital therapeutics, by contrast, are regulated as medical devices, so the United States Food and Drug Administration (FDA) provides oversight of their safety and effectiveness. However, formulating and implementing quality control measures for the algorithms used in digital therapeutics remains challenging, as does evaluating the external elements needed to deliver digital therapeutics (eg, operating systems or connectivity). Many digital therapeutics are meant to evolve continuously, which may mean that they need to be re-evaluated after an initial certification.

The FDA’s Digital Health Software Precertification (Pre-Cert) Program certifies companies that are found to have “a robust culture of quality and organizational excellence,” and then gives them a streamlined process for product approvals.⁵ This program is meant to address the challenges of regulating digital tools, but it has been criticized for setting less stringent standards than those applied to pharmaceuticals, for example by lacking clear evaluation criteria and a complete definition of “excellence.” Critics have also called for improved accountability for maintaining standards.⁶

The regulation of medical devices in the United States and Europe focuses on the product: the digital tool itself. However, a digital tool will be used within the context of a health delivery system, for purposes and goals specified within that system, perhaps as part of a plan for allocating available resources or for treating a particular patient population. Therefore, adequately assessing the safety and efficacy of a digital tool also requires a systems view of how that tool will be used.⁷

Digital tools that rely on machine learning present additional challenges for regulation. With machine learning algorithms, it can be difficult to determine why specific data inputs led to a particular output or finding.⁸ Consequently, it can be hard to evaluate and address systematic problems in the outputs, such as biases that disproportionately affect certain populations.⁹,¹⁰ There are efforts to develop more explainable algorithms, but best practices for identifying and addressing potential biases are still evolving.¹¹

There have been calls for more transparency in health algorithms, for example by having developers allow third-party review of their algorithms. Clinicians should also carefully consider how to convey the risks and limitations of digital therapeutic tools to patients for informed consent purposes, and clinicians themselves may need training to understand these limitations. Involving relevant stakeholders, from clinicians to patients and community members, in plans for adopting and implementing digital therapeutics in a health care system can also help address fairness concerns.

Privacy and Data Protection

Mental health data are widely viewed as more sensitive and potentially stigmatizing than other health data.¹²

Last year, hackers exploited a data security flaw in a popular psychotherapy app in Finland and then blackmailed thousands of users over their personal data.¹³ This incident highlighted both the value of behavioral information and the importance of strong data security measures. To facilitate telehealth during the pandemic, the Office for Civil Rights at the Department of Health and Human Services announced that it would not impose penalties for noncompliance with the Health Insurance Portability and Accountability Act (HIPAA) rules, including the Privacy Rule, in connection with the good faith provision of telehealth.¹⁴ Although this enforcement discretion is meant to end with the pandemic, tension will continue among the accessibility afforded by digital technology, the potential exposure of patient data through these tools, and the appropriate balancing of accountability and liability concerns.

Data gathered through digital therapeutics would generally be subject to HIPAA, which establishes protections for health information used by covered entities (ie, health care providers, health plans, and health care clearinghouses).¹⁵ The Health Information Technology for Economic and Clinical Health (HITECH) Act further requires business associates of a HIPAA-covered entity to comply with the HIPAA Security Rule.¹⁶,¹⁷ In some incidents, business associates have not adequately protected personal data.¹⁸ When covered entities prescribe digital therapeutics, they should have business associate agreements in place with the digital therapeutic company and its associates, and those agreements should include provisions for compliance.

Data brokerage is a $200 billion industry, and the current landscape of data brokerage and sharing raises additional concerns for the protection of patient data.¹⁹ Computer analytics make it possible to draw behavioral health inferences from seemingly unrelated information (eg, location data), and these inferences can have negative consequences for patients (eg, higher insurance rates or employment discrimination).²⁰⁻²³

Although only de-identified data (under HIPAA’s Safe Harbor method, data from which 18 specified identifiers, such as names, telephone numbers, and all elements of dates except the year, have been removed) may be shared without restriction under HIPAA,²⁴ advances in computing and the availability of large public databases make re-identification of personal data easier and more feasible.²⁵,²⁶ Thus, de-identified patient data shared with third parties could later be re-identified and used in ways the patient may not have foreseen or expected.²⁷
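The linkage risk described in the preceding paragraph can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the records, the names, the choice of year of birth, 3-digit ZIP prefix, and sex as quasi-identifiers, and the function `link`. It shows only the core idea behind many re-identification studies: if a combination of retained fields is unique both in a de-identified dataset and in a public dataset that includes names, the two records can be matched.

```python
from collections import Counter

# Hypothetical data for illustration only.
# Under HIPAA Safe Harbor, year of birth may generally be retained, and the
# first three ZIP digits may be kept for areas with more than 20,000 people;
# sex is not among the 18 listed identifiers.
deidentified = [
    {"birth_year": 1984, "zip3": "941", "sex": "F", "diagnosis": "major depressive disorder"},
    {"birth_year": 1984, "zip3": "941", "sex": "F", "diagnosis": "generalized anxiety disorder"},
    {"birth_year": 1991, "zip3": "945", "sex": "M", "diagnosis": "bipolar disorder"},
    {"birth_year": 1975, "zip3": "940", "sex": "F", "diagnosis": "PTSD"},
]

# Hypothetical public dataset that includes names (e.g., a voter roll).
public = [
    {"name": "A. Rivera", "birth_year": 1991, "zip3": "945", "sex": "M"},
    {"name": "B. Chen", "birth_year": 1975, "zip3": "940", "sex": "F"},
    {"name": "C. Okafor", "birth_year": 1984, "zip3": "941", "sex": "F"},
    {"name": "D. Smith", "birth_year": 1984, "zip3": "941", "sex": "F"},
]

QUASI_IDENTIFIERS = ("birth_year", "zip3", "sex")


def key(record):
    """Tuple of the quasi-identifier values shared by both datasets."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(deidentified, public):
    """Return (name, diagnosis) pairs whose quasi-identifier combination is
    unique in BOTH datasets, so the match is unambiguous."""
    deid_counts = Counter(key(r) for r in deidentified)
    public_counts = Counter(key(r) for r in public)
    # Duplicate keys overwrite each other here, but those keys are never
    # used because their public count is greater than 1.
    public_names = {key(r): r["name"] for r in public}
    matches = []
    for record in deidentified:
        k = key(record)
        if deid_counts[k] == 1 and public_counts.get(k, 0) == 1:
            matches.append((public_names[k], record["diagnosis"]))
    return matches


if __name__ == "__main__":
    for name, diagnosis in link(deidentified, public):
        print(f"{name} -> {diagnosis}")
```

Run as a script, it prints matches for the two records whose quasi-identifier combinations are unique, while the two records that share a combination cannot be linked unambiguously. Retaining more fields makes more combinations unique, and larger public datasets make it more likely that a unique record has a named counterpart.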

A growing number of jurisdictions have adopted or are considering regulations on personal and biometric data, such as the General Data Protection Regulation in the European Union and the California Consumer Privacy Act.²⁸

Against this backdrop, patients need to understand the risks and benefits of the data collected through digital therapeutics, and clinicians should convey this information through the informed consent process.

In addition, some digital therapeutics continuously monitor patients and collect large amounts of personal data. Further studies should evaluate the impact of such pervasive surveillance on patients and on the therapeutic alliance.

Bias and Fairness in Digital Therapeutics

The COVID-19 pandemic, as well as recent social justice movements, has put a spotlight on bias and inequities in the health care system.²⁹,³⁰

Because of historical injustices experienced by Black and Latinx individuals in health care, these groups are more likely to express concerns about privacy and the quality of digital mental health care.³¹ The shift to telehealth demonstrated that not all communities or populations have the resources or infrastructure to take advantage of digital tools. Community mental health centers, which disproportionately serve Black and Latinx patients, are less likely to have the necessary equipment.³⁰

If digital therapeutics are to fulfill their promise of increased access, improvements are needed in infrastructure, training, and the availability of clinician oversight to better serve low-income populations.³² Patients may also need associated resources, such as an internet connection or hardware.

Machine learning and digital health technologies also raise issues of racial bias and fairness.³³,³⁴ Bias can take different forms, such as an inadequate fit between the data collected and the research purpose, datasets that lack representative samples of the target population, and digital tools that produce disparate effects depending on how they are implemented.³⁵,³⁶

If the research population used to create a tool is not sufficiently representative of the diverse contexts in which the digital therapeutic will be used, the tool can produce worse outcomes for certain groups or communities. Approaches to addressing bias in digital health tools include technical fixes to datasets and algorithms and the articulation of principles for fairness in algorithmic tools. These are important measures, but a broader effort is needed to detect the ways social inequities can shape the development and efficacy of digital mental health tools.³⁷

Although digital therapeutics are regulated, the FDA has not required data on the diversity of the training data used for machine learning. In a study of machine learning health care devices approved by the FDA, investigators found that most of the 130 tools approved did not report whether they had been evaluated at more than 1 site, and only 17 provided demographic subgroup evaluations in their submissions.³⁸
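A demographic subgroup evaluation of the kind the study looked for can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the labels, predictions, and group names are hypothetical, sensitivity (the share of true cases a tool flags) stands in for whatever metrics a real submission would report, and the function names are illustrative rather than drawn from any FDA guidance.

```python
from collections import defaultdict

# Hypothetical screening results for illustration only:
# 1 = has the condition / flagged by the tool, 0 = does not / not flagged.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 6 + ["B"] * 6  # hypothetical demographic subgroup labels


def sensitivity_by_group(y_true, y_pred, groups):
    """Return sensitivity (true positive rate) for each subgroup.

    Sensitivity is the fraction of people who truly have the condition
    whom the tool flags. Subgroups with no true cases are omitted, since
    sensitivity is undefined for them.
    """
    true_positives = defaultdict(int)
    actual_positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual_positives[group] += 1
            if pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / n for g, n in actual_positives.items()}


def largest_gap(metric_by_group):
    """Difference between the best- and worst-performing subgroups."""
    values = metric_by_group.values()
    return max(values) - min(values)


if __name__ == "__main__":
    # Treating everyone as one group gives the aggregate figure.
    overall = sensitivity_by_group(y_true, y_pred, ["all"] * len(y_true))
    by_group = sensitivity_by_group(y_true, y_pred, groups)
    print("overall:", overall["all"])
    print("by group:", by_group)
    print("largest gap:", largest_gap(by_group))
```

With these made-up numbers the aggregate sensitivity is 0.625, which hides a 0.25 gap between group A (0.75) and group B (0.5); only the per-group breakdown surfaces that difference.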

Because the data collected from some digital therapeutics may also be used for health research, digital tools that are of limited effectiveness or accessible only to select populations could exacerbate existing health care inequalities.

Developers, researchers, and clinicians need to consider the usability and accessibility of digital therapeutics for culturally diverse populations and marginalized groups.³⁹

Digital therapeutics should be evaluated on how well their designs and implementation strategies take into account the needs of diverse populations (eg, individuals of various ages, races, genders, linguistic backgrounds, and disability statuses). Engaging diverse stakeholders is vital for providing equitable mental health care and avoiding a deeper digital divide in access. Future research should inform best practices, particularly regarding how digital therapeutics interact with the provision of mental health services in real-world settings.

Concluding Thoughts

Telehealth and digital therapeutics hold great promise for improving care for people with mental illness. It is important, however, to integrate digital tools into mental health care in ways that support, rather than disrupt, the therapeutic relationship and that provide equitable care.⁴⁰⁻⁴²

At the systems and policy levels, funding and resources are needed to meet different mental health needs and to broaden access to high-quality care for marginalized groups. Such efforts will require attention to a range of issues, including reimbursement, infrastructure, and the development of appropriate care models (eg, stepped-care models).⁴³

Digital therapeutics raise questions about appropriate lines of oversight and liability, and they may change the nature of the fiduciary relationships involved.⁴⁴

Frameworks for how digital therapeutics can address preventive care, patients in crisis, and special populations (eg, those with severe mental illness) also need to be developed and implemented. If we can meet these ethical challenges, digital therapeutics can provide mental health care that is not only innovative but also equitable.

Dr Martinez-Martin is an assistant professor at the Stanford Center for Biomedical Ethics and in the Department of Pediatrics. She has a secondary appointment in the Department of Psychiatry at Stanford University School of Medicine.

References

1. Martinez-Martin N, Dasgupta I, Carter A, et al. Ethics of digital mental health during COVID-19: crisis and opportunities. JMIR Ment Health. 2020;7(12):e23776.

2. Bauer M, Glenn T, Monteith S, et al. Ethical perspectives on recommending digital technology for patients with mental illness. Int J Bipolar Disord. 2017;5(1):6.

3. Torous J, Roberts LW. The ethical use of mobile health technology in clinical psychiatry. J Nerv Ment Dis. 2017;205(1):4-8.

4. Weisel KK, Fuhrmann LM, Berking M, et al. Standalone smartphone apps for mental health-a systematic review and meta-analysis. NPJ Digit Med. 2019;2:118.

5. US Food and Drug Administration. Digital health software precertification (pre-cert) program. September 11, 2020. Accessed April 21, 2021. https://www.fda.gov/medical-devices/digital-health-center-excellence/digital-health-software-precertification-pre-cert-program

6. Warren E, Murray P, Smith T. Letter to FDA on regulation of software as medical device. October 10, 2018. Accessed July 6, 2021. https://www.warren.senate.gov/oversight/letters/warren-murray-smith-press-fda-on-oversight-of-digital-health-devices

7. Gerke S, Babic B, Evgeniou T, Cohen IG. The need for a system view to regulate artificial intelligence/machine learning-based software as medical device. NPJ Digit Med. 2020;3:53.

8. Magrabi F, Ammenwerth E, McNair JB, et al. Artificial intelligence in clinical decision support: challenges for evaluating AI and practical implications. Yearb Med Inform. 2019;28(1):128-134.

9. Challen R, Denny J, Pitt M, et al. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. 2019;28(3):231-237.

10. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447-453.

11. Amann J, Blasimme A, Vayena E, et al. Explainability for artificial intelligence in healthcare: a multidisciplinary perspective. BMC Med Inform Decis Mak. 2020;20(1):310.

12. Aitken M, de St Jorre J, Pagliari C, et al. Public responses to the sharing and linkage of health data for research purposes: a systematic review and thematic synthesis of qualitative studies. BMC Med Ethics. 2016;17(1):73.

13. Ralston W. They told their therapists everything. Hackers leaked it all. Wired. May 4, 2021. Accessed June 13, 2021. https://www.wired.com/story/vastaamo-psychotherapy-patients-hack-data-breach

14. US Department of Health and Human Services. Notification of enforcement discretion for telehealth remote communications during the COVID-19 nationwide public health emergency. March 17, 2020. Accessed April 24, 2020. https://www.hhs.gov/hipaa/for-professionals/special-topics/emergency-preparedness/notification-enforcement-discretion-telehealth/index.html

15. Congress.gov. Health Insurance Portability and Accountability Act of 1996. Public Law 104-191. August 21, 1996. Accessed July 15, 2021. https://www.congress.gov/104/plaws/publ191/PLAW-104publ191.pdf

16. US Department of Health and Human Services. HITECH Act Enforcement Interim Final Rule. Reviewed June 16, 2017. Accessed July 15, 2021. https://www.hhs.gov/hipaa/for-professionals/special-topics/hitech-act-enforcement-interim-final-rule/index.html

17. Perakslis ED. Cybersecurity in health care. N Engl J Med. 2014;371(5):395-397.

18. Rothstein MA. Debate over patient privacy control in electronic health records. Hastings Center, Bioethics Forum, 2011. Updated February 5, 2014. Accessed July 6, 2021. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1764002

19. Crain M. The limits of transparency: data brokers and commodification. New Media & Society. 2018;20(1):88-104.

20. Allen M. Health insurers are vacuuming up details about you — and it could raise your rates. ProPublica. July 17, 2018. Accessed July 6, 2021. https://www.propublica.org/article/health-insurers-are-vacuuming-up-details-about-you-and-it-could-raise-your-rates

21. Wachter S. Data protection in the age of big data. Nature Electronics. 2019;2(1):6-7.

22. Skiljic A. The status quo of health data inferences. International Association of Privacy Professionals: Privacy Perspectives. March 19, 2021. Accessed July 6, 2021. https://iapp.org/news/a/the-status-quo-of-health-data-inferences/

23. Kröger JL, Raschke P, Bhuiyan TR. Privacy implications of accelerometer data: a review of possible inferences. In: Proceedings of the 3rd International Conference on Cryptography, Security and Privacy. ICCSP ’19. Association for Computing Machinery; 2019:81-87.

24. De-identification of protected health information: how to anonymize PHI. HIPAA Journal. October 18, 2017. Accessed July 6, 2021. https://www.hipaajournal.com/de-identification-protected-health-information/

25. Benitez K, Malin B. Evaluating re-identification risks with respect to the HIPAA privacy rule. J Am Med Inform Assoc. 2010;17(2):169-177.

26. Yoo JS, Thaler A, Sweeney L, Zang J. Risks to patient privacy: a re-identification of patients in Maine and Vermont statewide hospital data. Technology Science. October 9, 2018. Accessed July 6, 2021. https://techscience.org/a/2018100901/

27. Culnane C, Rubinstein BIP, Teague V. Health data in an open world. Cornell University. Computer Science. December 15, 2017. Accessed July 6, 2021. http://arxiv.org/abs/1712.05627

28. California Consumer Privacy Act of 2018 [1798.100-1798.199.100]. California Legislative Information. Updated November 3, 2020. Accessed July 15, 2021. https://leginfo.legislature.ca.gov/faces/codes_displayText.xhtml?division=3.&part=4.&lawCode=CIV&title=1.81.5

29. Webb Hooper M, Nápoles AM, Pérez-Stable EJ. COVID-19 and racial/ethnic disparities. JAMA. 2020;323(24):2466-2467.

30. van Deursen AJ. Digital inequality during a pandemic: quantitative study of differences in COVID-19-related internet uses and outcomes among the general population. J Med Internet Res. 2020;22(8):e20073.

31. George S, Hamilton A, Baker RS. How do low-income urban African Americans and Latinos feel about telemedicine? A diffusion of innovation analysis. Int J Telemed Appl. 2012;2012:715194.

32. Conrad R, Rayala H, Diamond R, et al. Expanding telemental health in response to the COVID-19 pandemic. Psychiatric Times. April 7, 2020. Accessed July 6, 2021. https://www.psychiatrictimes.com/view/expanding-telemental-health-response-covid-19-pandemic

33. Rajkomar A, Hardt M, Howell MD, et al. Ensuring fairness in machine learning to advance health equity. Ann Intern Med. 2018;169(12):866-872.

34. Gerke S, Minssen T, Cohen G. Ethical and legal challenges of artificial intelligence-driven healthcare. In: Bohr A, Memarzadeh K, eds. Artificial Intelligence in Healthcare. Elsevier; 2020:295-336.

35. Char DS, Shah NH, Magnus D. Implementing machine learning in health care-addressing ethical challenges. N Engl J Med. 2018;378(11):981-983.

36. Binns R. Fairness in machine learning: lessons from political philosophy. Cornell University. Computer Science. Updated March 23, 2021. Accessed July 6, 2021. http://arxiv.org/abs/1712.03586

37. Mittelstadt B. Principles alone cannot guarantee ethical AI. Nat Mach Intell. 2019;1(11):501-507.

38. Wu E, Wu K, Daneshjou R, et al. How medical AI devices are evaluated: limitations and recommendations from an analysis of FDA approvals. Nat Med. 2021;27(4):582-584.

39. Martschenko D, Martinez-Martin N. What about ethics in design bioethics? Am J Bioeth. 2021;21(6):61-63.

40. Martinez-Martin N, Dunn LB, Roberts LW. Is it ethical to use prognostic estimates from machine learning to treat psychosis? AMA J Ethics. 2018;20(9):E804-811.

41. Potier R. The digital phenotyping project: a psychoanalytical and network theory perspective. Front Psychol. 2020;11:1218.

42. Dagum P, Montag C. Ethical considerations of digital phenotyping from the perspective of a healthcare practitioner. In: Baumeister H, Montag C, eds. Digital Phenotyping and Mobile Sensing: New Developments in Psychoinformatics. Springer International Publishing; 2019:13-28.

43. Taylor CB, Fitzsimmons-Craft EE, Graham AK. Digital technology can revolutionize mental health services delivery: the COVID-19 crisis as a catalyst for change. Int J Eat Disord. 2020;53(7):1155-1157.

44. Cohen IG, Amarasingham R, Shah A, et al. The legal and ethical concerns that arise from using complex predictive analytics in health care. Health Aff (Millwood). 2014;33(7):1139-1147.